
We developed a team for the F180 RoboCup league, the FU-Fighters, which has taken part in the competitions held in Stockholm and Melbourne. To make the robots more autonomous, we replaced the global vision system with an omnidirectional local vision system in which each robot carries its own camera.

Three tasks have to be accomplished by the computer vision software that analyzes the captured video stream: detecting the ball, localizing the robot, and detecting obstacles. These tasks are non-trivial, since the images contain sensor noise and variations such as inhomogeneous lighting. The image analysis must be done in real time, which is difficult because of the enormous data rate of video streams. Some teams reduce the frame rate or resolution to match the available computing power; however, such an approach leads to less precise or less timely estimates of the game status. To be useful for behavior control, the system also needs to be robust: unexpected situations should not lead to failure, but to graceful degradation of the system's performance.

Local vision is the main sensor for most teams in the F2000 league. Some of the successful teams adopted the omnidirectional vision approach. The Golem team [4] impressively demonstrated in Melbourne that an omnidirectional camera can collect sufficient information for controlled play. Another example of the use of omnidirectional cameras is the goalie of the ART team [3,6,7]. In our league, F180, only three teams tried to play in Melbourne with local vision. In the smaller form factor, implementing a local vision system is more challenging than in the F2000 league: due to space and energy constraints, smaller cameras of lower quality must be used, and less computing power is available on the robot. Recently, the OMNI team [5] demonstrated controlled play with omnidirectional local vision at the Japan Open competition. This team sends the video stream to an off-the-field computer that contains special-purpose hardware for image processing.

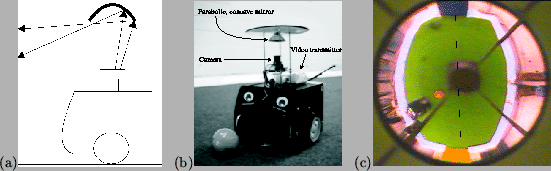

We use a similar system design and mount a small video camera and a mirror on top of each robot, as shown in Fig. 1. The camera is directed upwards and looks into a parabolic mirror. The mirror collects light rays from all directions and reflects them into the camera. The parabolic shape of the mirror produces less distortion than a spherical mirror. Far-away objects appear larger and are hence easier to detect [1]. The optical mapping preserves the angle of an object relative to the perception origin. The distance function of the camera, which is non-linear and increasing, can be calibrated easily by measuring corresponding distances in the image and in the world. To avoid carrying a large computer on the robots, the video stream is transmitted to an external computer via an analog radio link.
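The calibration idea can be illustrated with a minimal sketch. It assumes that the distance function is stored as a table of measured (image radius, world distance) pairs and is interpolated linearly between them. The table values, the image center `(cx, cy)`, and the function name `pixel_to_world` are hypothetical and chosen only for illustration; the text above does not specify how the calibration is represented.

```python
import math
from bisect import bisect_right

# Hypothetical calibration table: (radius in pixels, distance in cm).
# The values are invented for illustration; real ones would be measured
# by placing a marker at known distances from the robot.
CALIBRATION = [(0.0, 0.0), (40.0, 20.0), (80.0, 50.0), (110.0, 100.0), (130.0, 200.0)]


def pixel_to_world(px, py, cx, cy, table=CALIBRATION):
    """Map an image pixel to robot-centric (distance_cm, angle_rad).

    (cx, cy) is the image position of the mirror center (assumed known).
    """
    dx, dy = px - cx, py - cy
    radius = math.hypot(dx, dy)
    # The mirror preserves the bearing, so the image angle is used directly.
    angle = math.atan2(dy, dx)

    radii = [r for r, _ in table]
    # Beyond the last calibrated radius, clamp to the largest known distance.
    if radius >= radii[-1]:
        return table[-1][1], angle

    # Find the calibration interval containing the radius and
    # interpolate linearly inside it (an assumption of this sketch).
    i = bisect_right(radii, radius)
    (r0, d0), (r1, d1) = table[i - 1], table[i]
    t = (radius - r0) / (r1 - r0)
    return d0 + t * (d1 - d0), angle
```

Because the distance function is increasing, the table is sorted in both columns, which is what makes the binary search and the interpolation valid.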

The main idea of this paper is a tracking system that analyzes the video stream produced by an omnidirectional camera while inspecting only a small fraction of the incoming data. This allows the system to run at full frame rate and full resolution on a standard PC. To initialize the tracking automatically, an initial search analyzes the entire video frame.
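The general principle of inspecting only a small part of each frame, with a full-frame search as initialization, can be sketched as follows. This is not the tracking method of the paper, which is described in a later section. The sketch assumes a hypothetical pre-classified binary image `mask` (a list of rows of booleans marking ball-colored pixels) and a hypothetical window size `half_window`.

```python
def find_blob(mask, x0, y0, x1, y1):
    """Return the centroid (x, y) of True pixels in [x0, x1) x [y0, y1), or None."""
    sum_x = sum_y = count = 0
    for y in range(max(0, y0), min(len(mask), y1)):
        row = mask[y]
        for x in range(max(0, x0), min(len(row), x1)):
            if row[x]:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return sum_x / count, sum_y / count


def track(mask, last_pos, half_window=8):
    """Search a small window around last_pos; fall back to the whole frame.

    last_pos is the (x, y) estimate from the previous frame, or None when
    the tracker has not been initialized yet.
    """
    if last_pos is not None:
        lx, ly = int(round(last_pos[0])), int(round(last_pos[1]))
        hit = find_blob(mask,
                        lx - half_window, ly - half_window,
                        lx + half_window + 1, ly + half_window + 1)
        if hit is not None:
            return hit
    # Initial search (or recovery after losing the object): scan everything.
    return find_blob(mask, 0, 0, len(mask[0]), len(mask))
```

With a 17 x 17 window, the tracked case touches 289 pixels per frame regardless of the image size, whereas the fallback touches every pixel. This difference is what makes full frame rate feasible once tracking is initialized.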

The paper is organized as follows: The next section describes the initial localization of the robot, the ball and obstacles. The tracking system is described in Section 2. Some experimental results are reported at the end.